And You Thought The Surge Was a Bad Idea

Notes from the New Vermont

Commentary #202: Why, Robot

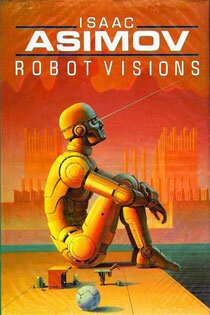

Let me begin by saying that nobody loves robots more than I do. I stumbled onto Isaac Asimov’s I, Robot series when I was twelve, and never looked back.

What made the novels so fascinating is that the robots were always experiencing very human emotional breakdowns, and only a gifted robot psychologist could bring them back under control.

Mostly the machines found themselves conflicted over Asimov’s First Law of Robotics: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.”

A very clear moral precept, the First Law.

The catch, of course, is that the Second Law of Robotics calls for robots to immediately obey any command from any human being. You see the problem: in nearly every story, humans put robots in a position where they must choose between morality and obedience.

You could argue that the series had its origins in the experience of World War II and the Nuremberg Trials, in the question of what society can properly ask of its human soldiers.

I’m thinking about Asimov today because of what I read online yesterday: the Pentagon is about to deploy 18 fully armed robots in Iraq, tiny systems about three feet high, mounted on tank treads, each carrying an M249 machine gun.

The robots go by the acronym SWORDS, short for “special weapons observation remote reconnaissance direct action system.”

Really, that’s the acronym.

These new robots are based on the same technology as those currently being used to disarm roadside bombs. Both are piloted remotely, using a joystick and a keyboard, and both can achieve speeds up to about 20 miles an hour. The only real difference is that the bomb disposal robots adhere to Asimov’s First Law.

The new SWORDS units, on the other hand, can be modified to fire 40mm grenades and even rockets.

Now, with US casualties in Iraq mounting, you might wonder why armed robots have taken so long to come to the war zone. After all, they were declared battle-ready back in 2004. But it turns out there were a few glitches.

First, the units tend to spin wildly out of control, for reasons that remain unclear. Second, soldiers operating the robots occasionally experience a lag time of up to eight seconds, usually during combat.

In other words, when the robots aren’t firing wildly at anything in a 360-degree radius, they’re prone to silence and unresponsive behavior, like sulky adolescents.

Of course, the Army found an elegant solution to the problem of runaway robots: a kill switch that disables the unit if it “goes crazy.”

But when you think about it, the kill switch simply leaves the $200,000 unit lying defenseless in the sand.

And that’s the problem with this whole technology: the SWORDS robots move far more slowly than a Humvee, and their remote piloting is far clumsier. Each SWORDS unit is at a significant disadvantage against a guerrilla insurgency. Unlike Predator drones, which fly far above small arms fire, these new killer robots are actually sitting ducks.

So why is the Army rushing to field them now, when their liabilities still so clearly outweigh their usefulness?

Well, the link I clicked yesterday took me to a website called Quickjump.net, which looks like your average technology/video gaming site, with one exception: it was plastered with ads for something called “The Army Gaming Championships,” a competitive video game tournament sponsored by the U.S. Army and offering over $200,000 in assorted prize money.

In other words, the SWORDS units may or may not achieve results on the battlefield, but they are already being smoothly integrated into the Army’s increasingly brazen pitch to adolescent boys.

And that, more than anything else, is what must have Isaac Asimov, the great moral philosopher of my youth, spinning in his grave.

[This piece aired first on Vermont Public Radio.]